Semantic Modeling

Initial Situation

Exploring and bringing together heterogeneous data is an ongoing process that has grown in importance over time. The need to link and integrate different data sources has been around for a long time. However, the increasing availability and use of disparate data sources has led to an increased focus on research and development of heterogeneous data processing methods in recent decades. In the 1990s, researchers began to focus more on the integration of heterogeneous data sources. Various approaches and techniques have been developed to address the challenges of processing, integrating, and analyzing heterogeneous data. With advances in database technology, the development of standards for data exchange, and the increasing importance of big data and data integration, research on heterogeneous data sources has evolved. Today, it is an active and dynamic research area. One research approach is semantic data management.

DRL for Job Shop Scheduling

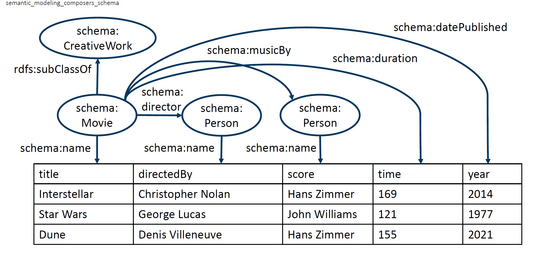

In the area of semantic data management, the use of shared conceptualizations such as knowledge graphs or ontologies has proven to be particularly effective for efficiently managing and consolidating heterogeneous data sources. For example, based on an existing ontology, all data attributes of the existing data sets are mapped to classes of this ontology. This process is called semantic tagging and enables the interpretation of data attributes in the context of the ontology. To obtain a fine-grained description of the data, the creation of a semantic model is the established approach.

However, the manual creation of semantic models requires expertise and is time-consuming. Today, there are several automated and semi-automated approaches to support the creation of a semantic model. They use various information from and about the datasets to be annotated (labels, data, metadata) to create semantic labels and complete semantic models. However, automated approaches are limited in their applicability to real-world scenarios for various reasons and therefore require manual post-processing. This post-processing is called semantic refinement. It is usually performed by domain experts, i.e. users who know the data very well, but usually have little or no knowledge of semantic technologies.

Our research addresses both challenges. We focus both on improved automatic generation of semantic labels and models using machine learning techniques, and on efficient and semi-automatic post-processing (e.g. using recommender systems) of automatically generated semantic models. Our overall goal is to make semantic modeling practical and scalable.

Schlably Framework

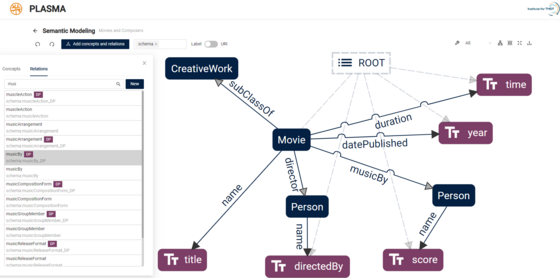

A key result of our research is the PLASMA framework. PLASMA is a tool for creating and editing semantic models that is primarily aimed at non-specialist users. We released the first version of this semantic modeling platform in 2021 and have continued to develop it. PLASMA provides an easy-to-use graphical user interface to lower the entry barrier for users with no experience in semantic modeling. PLASMA handles all interactions related to the modeling process, manages custom ontologies and a knowledge graph, and is able to analyze input data to identify its schema. In addition, the interfaces and libraries provided by PLASMA enable direct integration into data spaces. The underlying microservice architecture also allows the integration of various existing approaches to automated semantic modeling. PLASMA is open source and can be evaluated here.

Application

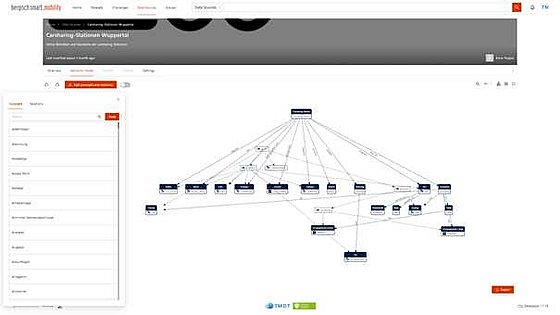

Our research is applied in all contexts dealing with semantic data management (discovery and integration of semantic data) or wherever mappings between concepts and data are needed. Today, this is especially the case in the areas of data spaces, the construction of knowledge graphs, and data management according to the FAIR principles.